Publications

Highlights

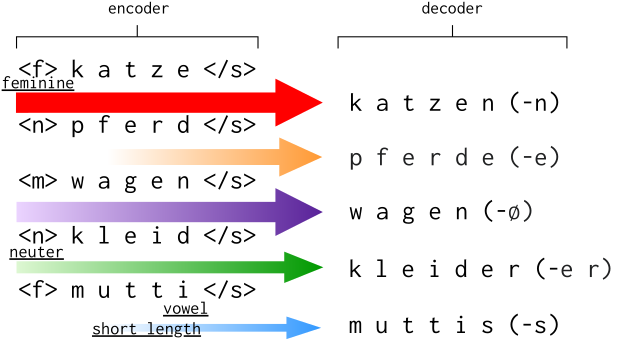

Inflectional morphology has long been a useful testing ground for broader questions about generalisation in language and the viability of neural network models as cognitive models of language. Here, in line with that tradition, we explore how recurrent neural networks acquire the complex German plural system and reflect upon how their strategy compares to human generalisation and rule-based models of this system. We perform analyses including behavioural experiments, diagnostic classification, representation analysis and causal interventions, suggesting that the models rely on features that are also key predictors in rule-based models of German plurals. However, the models also display shortcut learning, which is crucial to overcome in search of more cognitively plausible generalisation behaviour.

Verna Dankers, Anna Langedijk, Kate McCurdy, Adina Williams, Dieuwke Hupkes

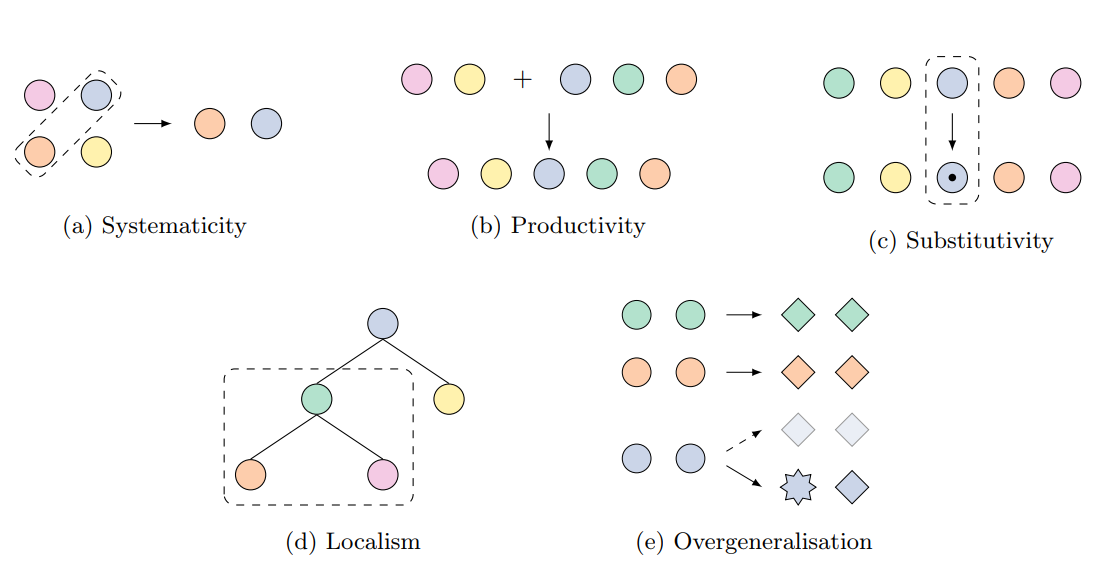

The compositionality of neural networks has recently been a hot topic, on which no consensus exists. In this paper, we argue that this in part stems from the fact that there is little consensus on what it means for a network to be compositional, and we present a set of five tests that provide a bridge between the linguistic and philosophical knowledge about compositionality and deep neural networks. We use our tests to evaluate a recurrent model, a convolution-based model and a Transformer, and provide an in-depth analysis of the results.

Dieuwke Hupkes, Verna Dankers, Mathijs Mul and Elia Bruni

Full List

The paradox of the compositionality of natural language: a neural machine translation case study.

Verna Dankers, Elia Bruni and Dieuwke Hupkes •

Generalising to German Plural Noun Classes, from the Perspective of a Recurrent Neural Network

Verna Dankers, Anna Langedijk, Kate McCurdy, Adina Williams, Dieuwke Hupkes • CoNLL, Best Paper Award

Attention vs Non-attention for a Shapley-based Explanation Method

Tom Kersten, Hugh Mee Wong, Jaap Jumelet and Dieuwke Hupkes • DeeLIO 2021

Co-evolution of language and agents in referential games

Gautier Dagan, Dieuwke Hupkes and Elia Bruni • EACL 2021

Language modelling as a multi-task problem

Lucas Weber, Jaap Jumelet, Elia Bruni and Dieuwke Hupkes • EACL 2021

Internal and External Pressures on Language Emergence: Least Effort, Object Constancy and Frequency.

Diana Rodríguez Luna, Edoardo Ponti, Dieuwke Hupkes and Elia Bruni • EMNLP-Findings

The grammar of emergent languages

Oskar van der Wal, Silvan de Boer, Elia Bruni and Dieuwke Hupkes • EMNLP 2020

Compositionality decomposed: how do neural networks generalise?

Dieuwke Hupkes, Verna Dankers, Mathijs Mul and Elia Bruni • Journal of Artificial Intelligence Research

Location Attention for Extrapolation to Longer Sequences

Yann Dubois, Gautier Dagan, Dieuwke Hupkes, Elia Bruni • ACL 2020

Internal and External Pressures on Language Emergence: Least Effort, Object Constancy and Frequency.

Diana Rodríguez Luna, Edoardo Maria Ponti, Dieuwke Hupkes and Elia Bruni •

Analysing neural language models: contextual decomposition reveals default reasoning in number and gender assignment

Jaap Jumelet, Willem Zuidema and Dieuwke Hupkes • CoNLL 2019

Mastering emergent language: learning to guide in simulated navigation

Mathijs Mul, Diane Bouchacourt and Elia Bruni •

Assessing incrementality in sequence-to-sequence models

Dennis Ulmer, Dieuwke Hupkes and Elia Bruni • RepL4NLP, ACL 2019

Transcoding compositionally: using attention to find more generalizable solutions

Kris Korrel, Dieuwke Hupkes, Verna Dankers and Elia Bruni • BlackboxNLP, ACL 2019

On the Realization of Compositionality in Neural Networks

Joris Baan, Jana Leible, Mitja Nikolaus, David Rau, Dennis Ulmer, Tim Baumgärtner, Dieuwke Hupkes and Elia Bruni • BlackboxNLP, ACL 2019

The Fast and the Flexible: training neural networks to learn to follow instructions from small data

Rezka Leonandya, Elia Bruni, Dieuwke Hupkes and German Kruszewski • IWCS 2019

Learning compositionally through attentive guidance

Dieuwke Hupkes, Anand Singh, Kris Korrel, German Kruszewski and Elia Bruni • CICLing 2019

Do language models understand anything? On the ability of LSTMs to understand negative polarity items

Jaap Jumelet and Dieuwke Hupkes • BlackboxNLP, EMNLP 2018